Kelin Yu

Email: kyu85 [at] umd [dot] edu

CV

Google Scholar

Twitter/X

Github

I am a second-year Ph.D. student in Computer Science at the University of Maryland, where I work with

Prof. Ruohan Gao. I also work closely with Prof. Yiannis Aloimonos and Prof. Pratap Tokekar.

I am interested in MultiSensory Robot Learning, Learning from Cross-embodiment Human Videos, and Robot Manipulation.

I received my master's degree in Computer Science from Georgia Tech in May 2024, advised by

Ph.D. mentor Yunhai Han,

Prof. Ye Zhao and

Prof. Danfei Xu.

Previously, I obtained dual bachelor's degrees in Electrical Engineering and Mathematics from Georgia Tech in 2022 and

was a Robotics Software Engineering intern at Amazon.

I'm open to discussions and collaborations, so feel free to drop me an email if you are interested.

News

- 2025/10 Invited talk at Shanghai Jiao Tong University

- 2025/9 ControlTac is accepted by ICCV CDEL Workshop with Oral Presentation — link

- 2025/6 GenFlowRL is accepted by ICCV 2025 — link

- 2025/5 GenFlowRL is presented at ICRA FMNS Workshop with Spotlight and Best Paper Nomination — link

- 2025/4 Invited talk at UMD Robotics Symposium — link

- 2024/8 Graduated from Georgia Tech and started my Ph.D. at UMD with Prof. Ruohan Gao

- 2024/8 MimicTouch accepted by CoRL 2024 — link

- 2024/4 One paper published at IEEE T-Mech — link

- 2023/12 MimicTouch won the Best Paper Award at NeurIPS TouchProcessing Workshop — link

- 2023/12 Invited oral talk at NeurIPS TouchProcessing Workshop — link

Mentored Students

- Dongyu Luo — Undergraduate, HKU, 2025–

- Haode Zhang — Undergraduate, SJTU, 2025–

External Collaborators (Past & Present)

- Yunhai Han — GT Robotics Ph.D. in Prof. Harish Ravichandar's Lab

- Sheng Zhang — UMD CS Ph.D. in Prof. Heng Huang's Lab

- Amir-Hossein Shahidzadeh — UMD CS Ph.D. in Prof. Yiannis Aloimonos's Lab

- Shuo Cheng — GT CS Ph.D. in Prof. Danfei Xu's Lab

- Qixian Wang — UIUC ME Ph.D. in Prof. Mattia Gazzola's Lab

- Vaibhav Saxena — GT CS Ph.D. in Prof. Danfei Xu's Lab

Publications

🟨 Highlighted entries are representative works

(* indicates equal contribution)

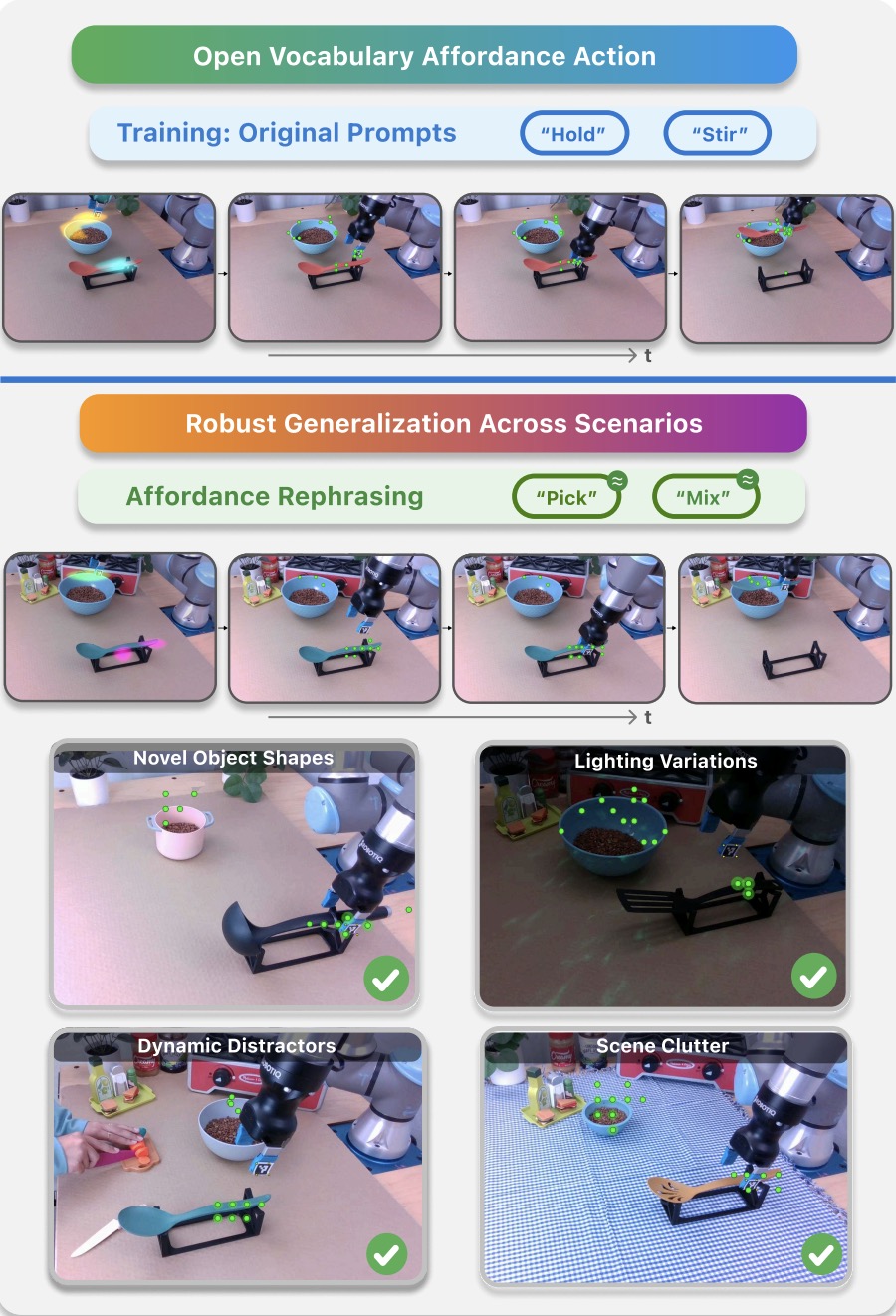

Afford2Act: Affordance-Guided Automatic Keypoint Selection for Generalizable and Lightweight Robotic Manipulation

Anukriti Singh, Kasra Torshizi, Khuzema Habib, Kelin Yu, Ruohan Gao, Pratap Tokekar

AFFORD2ACT is an affordance-guided framework that distills a minimal set of semantic 2D keypoints from a text prompt and a single image. It follows a three-stage pipeline: affordance filtering, category-level keypoint construction, and transformer-based policy learning with embedded gating to reason about the most relevant keypoints. The result is a compact 38-dimensional state policy that can be trained in 15 minutes and performs well in real time without proprioception or dense representations.

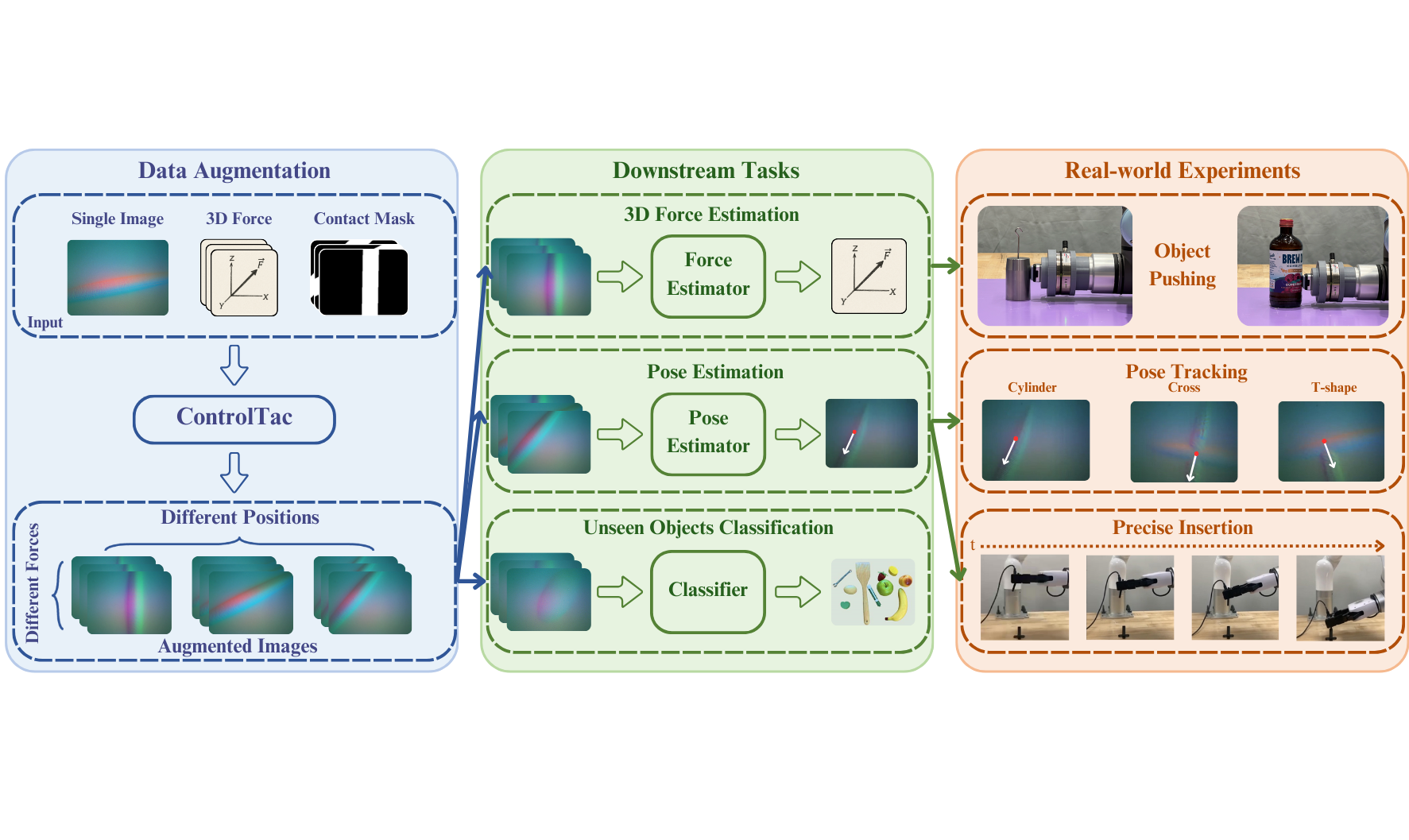

ControlTac: Force- and Position-Controlled Tactile Data Augmentation with a Single Reference Image

Dongyu Luo*, Kelin Yu*, Amir-Hossein Shahidzadeh, Cornelia Fermüller, Yiannis Aloimonos, Ruohan Gao (Dongyu is my undergraduate mentee)

ICCV CDEL Workshop 2025 (Oral)

Paper / Website / In Submission

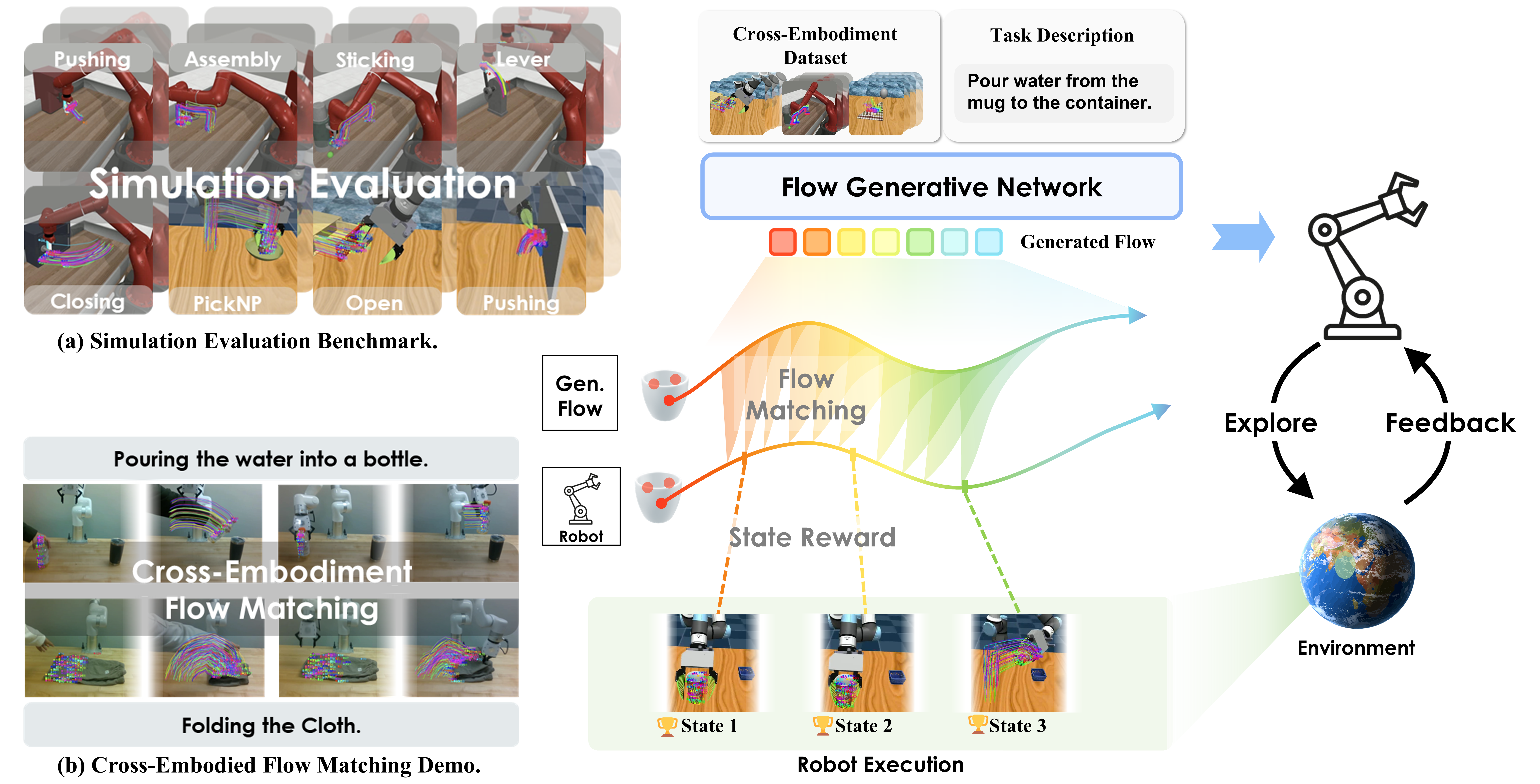

GenFlowRL: Shaping Rewards with Generative Object-Centric Flow in Visual Reinforcement Learning

Kelin Yu*, Sheng Zhang*, Harshit Soora, Furong Huang, Heng Huang, Pratap Tokekar, Ruohan Gao

ICCV 2025

ICRA FMNS Workshop 2025 (Spotlight, Best Paper Nomination)

Paper / Website / Video

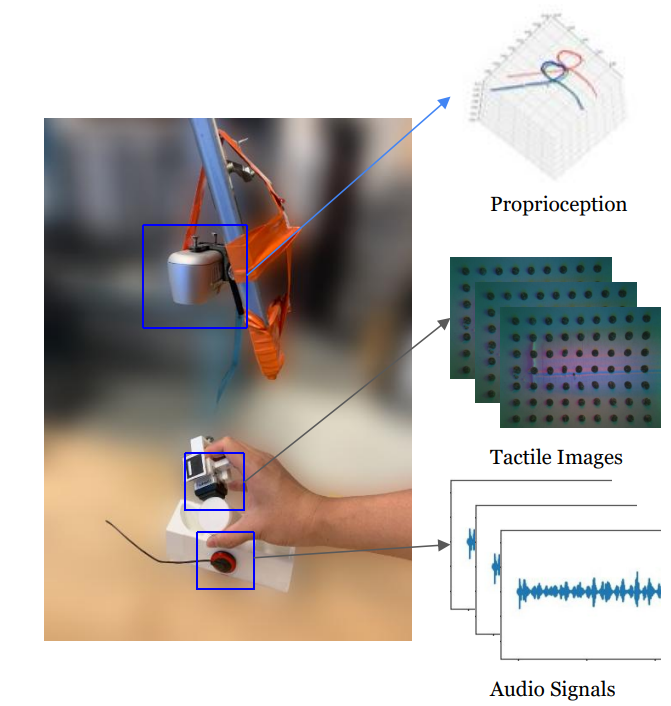

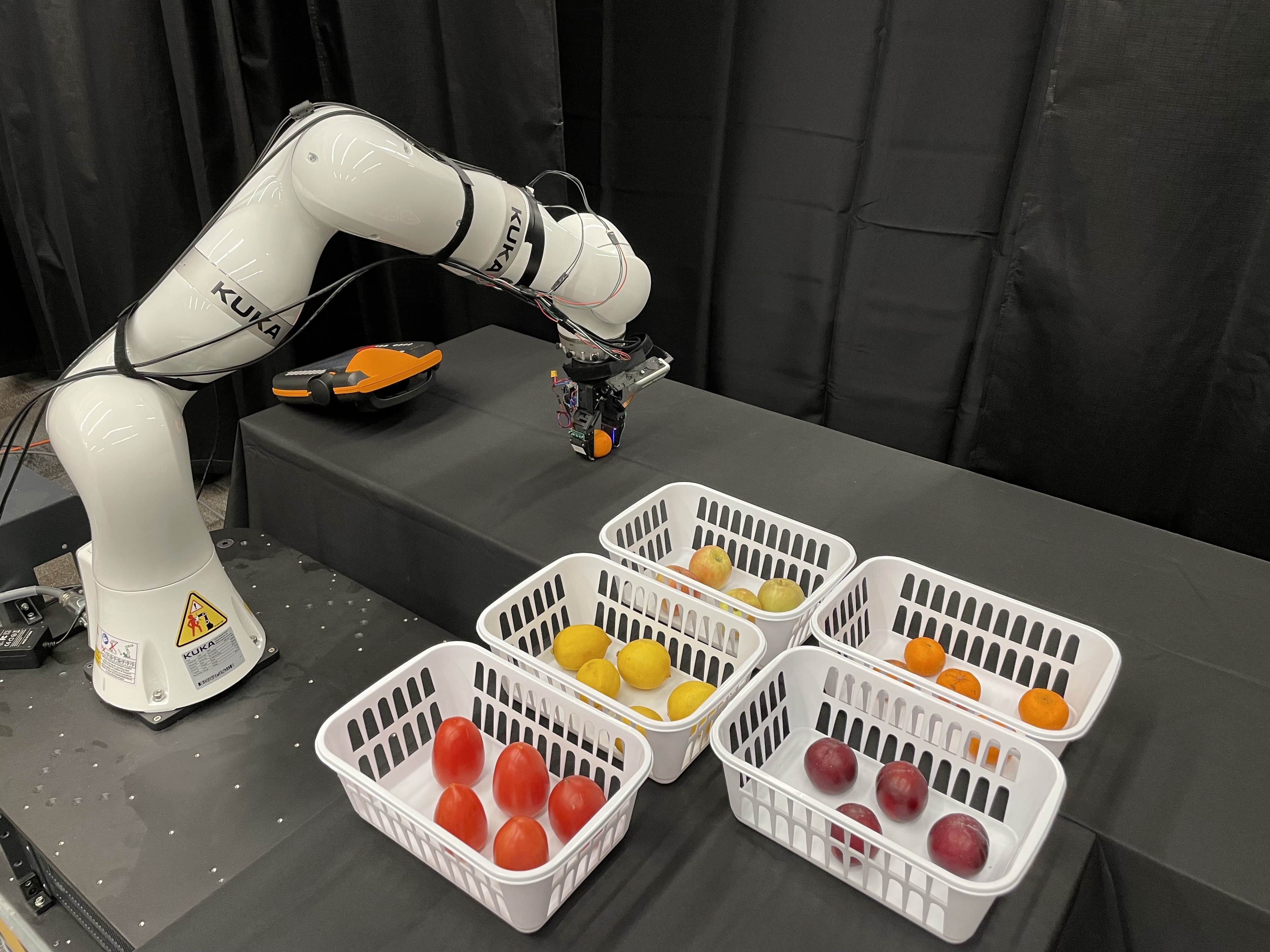

MimicTouch: Leveraging Multi-modal Human Tactile Demonstrations for Contact-rich Manipulation

Kelin Yu*, Yunhai Han*, Qixian Wang, Vaibhav Saxena, Danfei Xu, Ye Zhao

CoRL 2024

NeurIPS Touch Processing Workshop 2023 (Best Paper Award)

CoRL Deployable Workshop 2023

Paper / Website / Video

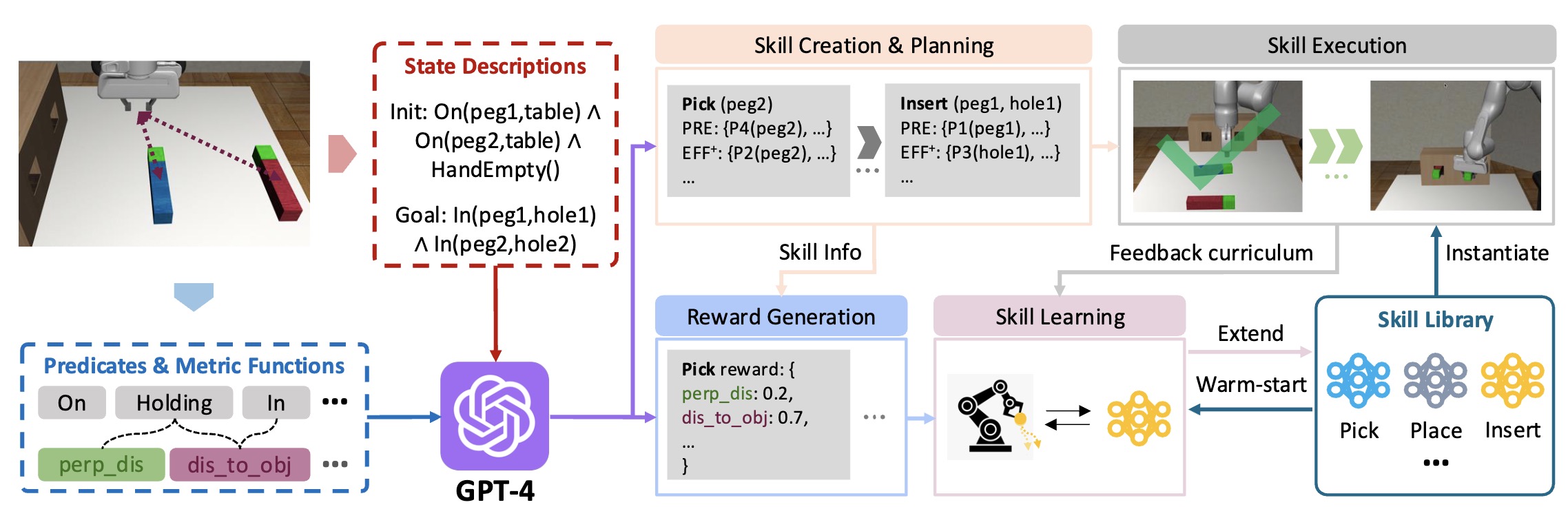

Continual Robot Learning via Language-Guided Skill Acquisition

Zhaoyi Li*, Kelin Yu*, Shuo Cheng*, Danfei Xu

ICLR AGI Workshop 2024 / ICLR LLMAgent Workshop 2024 / Website / Under Review by RA-L

We developed LG-SAIL (Language Models Guided Sequential, Adaptive, and Incremental Robot Learning), a framework that leverages Large Language Models (LLMs) to integrate task and motion planning (TAMP) with deep reinforcement learning (DRL) for continuous skill learning in long-horizon tasks. Our framework automates task decomposition, operator creation, and dense reward generation to acquire the desired skills efficiently.

Learning Generalizable Vision-Tactile Robotic Grasping Strategy for Deformable Objects via Transformer

Yunhai Han*, Kelin Yu*, Rahul Batra, Nathan Boyd, Chaitanya Mehta, Tuo Zhao, Yu She, Seth Hutchinson, Ye Zhao

Transactions on Mechatronics 2024 (Long Version)

AIM 2023 (Short Version)

Paper / Code

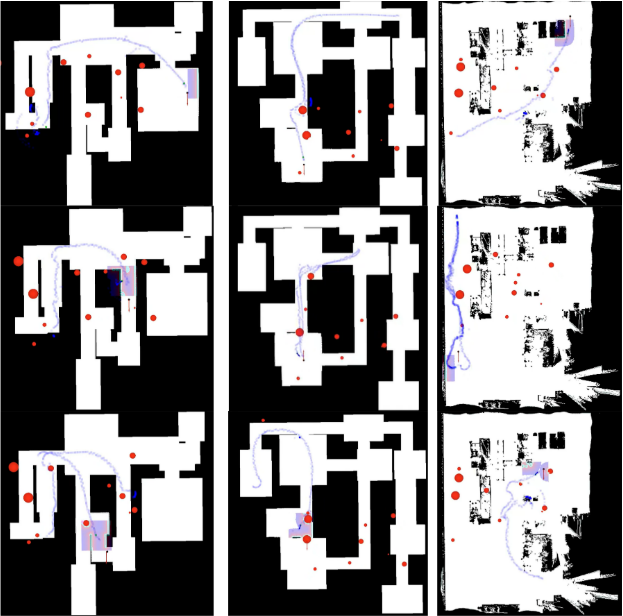

Evolutionary Curriculum Training for DRL-Based Navigation Systems

Kelin Yu*, Max Asselmeier*, Zhaoyi Li*, Danfei Xu

RSS MultiAct Workshop 2023 / Website / Paper

We introduce evolutionary curriculum training, an approach for improving DRL-based collision avoidance in navigation. Its primary goal is to evaluate the collision avoidance model's competency in various scenarios and create curricula that strengthen its weak skills. The paper introduces an evaluation technique that assesses the DRL model's performance in navigating structured maps and avoiding dynamic obstacles. An evolutionary training environment then generates curricula targeting the skills found inadequate in the previous evaluation. We benchmark our model across five structured environments to test the hypothesis that this evolutionary training environment leads to a higher success rate and a lower average number of collisions.

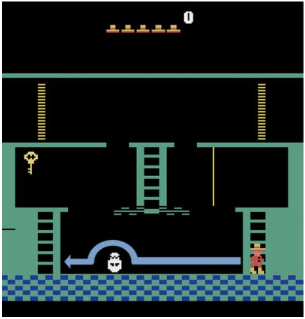

Temporal Video-Language Alignment Network for Reward Shaping in Reinforcement Learning

Kelin Yu*, Ziyuan Cao*, Reshma Ramachandra*

Technical Report 2022 / Paper

Designing appropriate reward functions for Reinforcement Learning (RL) approaches has been a significant problem. Using natural language instructions to provide intermediate rewards to RL agents, a process known as reward shaping, can help the agent reach the goal state faster. In this work, we propose a natural language-based reward-shaping approach that maps trajectories from the Montezuma's Revenge game environment to corresponding natural language instructions using an extension of the LanguagE-Action Reward Network (LEARN) framework. These trajectory-language mappings are then used to generate intermediate rewards, which are integrated into reward functions that any standard RL algorithm can use to learn an optimal policy.

Work Experience

Robotics Software Engineering intern

Amazon Robotics AI /

Designed and built a Calibration Drift Detector for our industrial manipulator using Python, Open3D, OpenCV, and a machine learning classifier.

Each multi-pick detected as a single pick costs $12, and each MEP/DEP package costs $0.05. My system potentially saves thousands of dollars every day.

Used AWS S3 to retrieve past images and point clouds, implemented advanced computer vision algorithms, and applied an ML classifier to detect calibration drift.

Projects

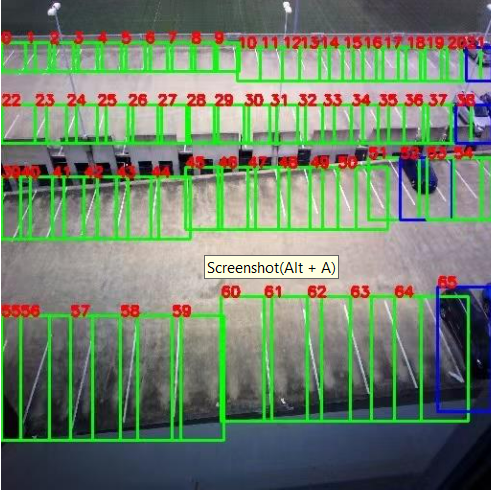

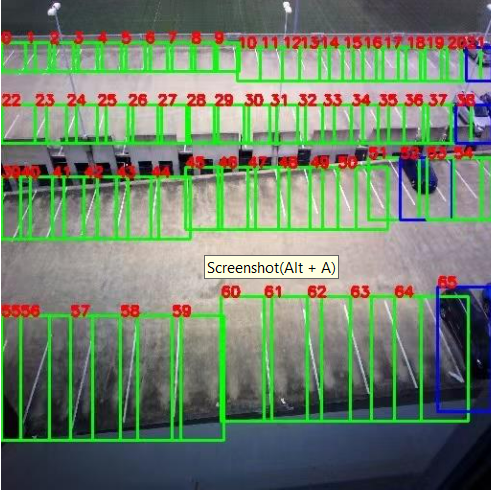

iValet: An Intelligent Parking Lot Management System and Interface

Kelin Yu*, Faiza Yousuf*, Wei Xiong Toh*, Yunchu Feng*

Senior Design 2022 / Website

The iValet intelligent parking lot management system automatically directs drivers to the nearest vacant parking spot upon entering a crowded parking lot. The system consists of a camera, a machine learning development board, a PostgreSQL server, and a user interface (web application). The camera takes photos of the entire parking lot, and the development board runs segmentation and classification algorithms on those photos. The SQL server stores data about each parking spot and is written to by the image processing models, a path-planning algorithm, and the UI. The web application shows end users directions to empty parking spots based on the location of the parking lot entrance.

Awards

Professional Service

- ICLR 2025, 2026: Reviewer

- RA-L: Reviewer

- ICCV 2025: Reviewer

- NeurIPS 2024, 2025: Reviewer

- RSS 2025: Reviewer

- ICML 2025: Reviewer

- ICRA 2025: Reviewer

- CoRL Deployable Workshop 2023: Reviewer

Teaching Experience

- CMSC 848M: Multimodal Computer Vision, University of Maryland, Spring 2025

- CMSC 421: Introduction to Artificial Intelligence, University of Maryland, Fall 2024

- CS 4476/6476: Computer Vision, Georgia Tech, Spring 2024, Fall 2023, Spring 2023, Fall 2022

- ECE 3741: Instrumentation and Electronics Laboratory, Georgia Tech, Spring 2021
